Purging content after backing-up and then restoring a database

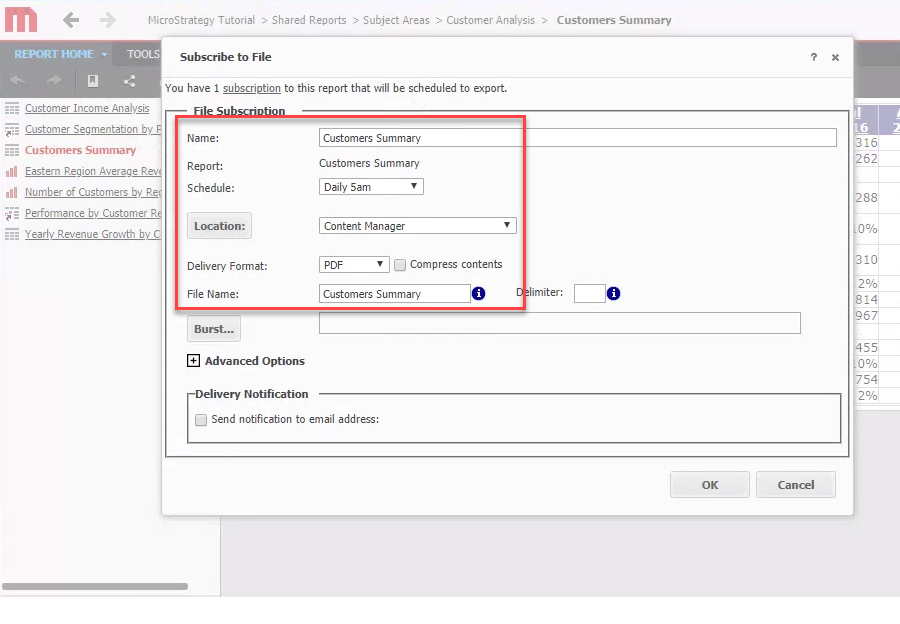

How do we create new datasets that contain just a portion of the content from an existing dataset? I’ve been asked this dozens of times over the years. Someone asked again this past week, so I figured I’d bundle up my typical method into a PowerShell script. The script can be run via a scheduled task, so you can routinely use it to refresh development environments, export to less-secured environments, or curate training datasets.

Start by installing the SQL Server PowerShell module from an administrative PowerShell window by running this command:

    Install-Module -Name SqlServer

You will be prompted to allow the install to continue.

It will then initiate the download and attempt to install.

In my case, I’ve already got it installed. If you do as well then you’ll see these types of errors, which are safe to ignore.
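If the script runs unattended, it may be simpler to skip the install when the module is already present. Here is a minimal sketch of that check; `Test-ModuleInstalled` and `Install-ModuleIfMissing` are hypothetical helper names and are not part of my script.

```powershell
# Hypothetical helper: returns $true when a module with the given name
# can be found on this machine.
function Test-ModuleInstalled {
    param([Parameter(Mandatory)][string]$Name)
    return [bool](Get-Module -ListAvailable -Name $Name)
}

# Hypothetical helper: installs the module only when it is missing,
# which avoids the "already installed" noise on repeat runs.
function Install-ModuleIfMissing {
    param([Parameter(Mandatory)][string]$Name)
    if (-not (Test-ModuleInstalled -Name $Name)) {
        Install-Module -Name $Name
    }
}
```

Calling `Install-ModuleIfMissing -Name SqlServer` at the top of the script would then be a no-op on machines that already have the module.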

Now we can start scripting out the process. The first step is to back up the existing database with the Backup-SqlDatabase cmdlet. To make things easier, we use variables for the key parameters (like instance name, database name, and path to the backup file).

    # Backup the source database to a new file
    Write-Information "Backing Up $($sourceDatabaseName)"
    Backup-SqlDatabase -ServerInstance $serverInstance -Database $sourceDatabaseName -BackupAction Database -CopyOnly -BackupFile $backupFilePath

Next comes the restore of that backup. Unfortunately I cannot use the Restore-SqlDatabase cmdlet, because it does not support restoring over databases that have other active connections. Instead I’ll run a series of statements that set the database to single-user mode, restore the database (with relocated files), and then set the database back to multi-user mode.

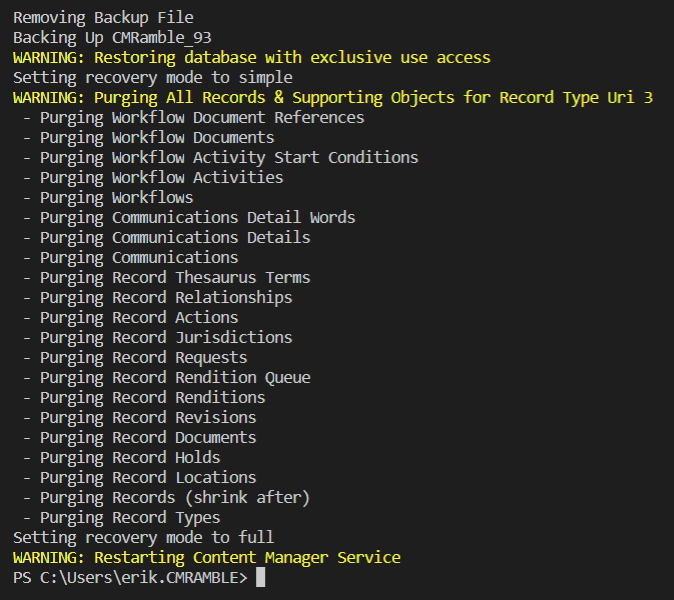

    # Restore the database by first getting an exclusive lock with single user access, enable multi-user when done
    Write-Warning "Restoring database with exclusive use access"
    $ExclusiveLock = "USE [master]; ALTER DATABASE [$($targetDatabaseName)] SET SINGLE_USER WITH ROLLBACK IMMEDIATE;"
    $RestoreDatabase = "RESTORE FILELISTONLY FROM disk = '$($backupFilePath)'; RESTORE DATABASE $($targetDatabaseName) FROM disk = '$($backupFilePath)' WITH replace, MOVE '$($sourceDatabaseName)' TO '$($sqlDataPath)\$($targetDatabaseName).mdf', MOVE '$($sourceDatabaseName)_log' TO '$($sqlDataPath)\$($targetDatabaseName)_log.ldf', stats = 5; ALTER DATABASE $($targetDatabaseName) SET MULTI_USER;"
    ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement ($ExclusiveLock+$RestoreDatabase)
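The restore above assumes the logical file names inside the backup match the source database name (plus `_log`). If yours differ, one option is to read them from RESTORE FILELISTONLY and build the MOVE clauses from the result. The sketch below is only an illustration: `New-RestoreStatement` is a hypothetical helper, and the `.mdf`/`.ndf`/`.ldf` naming of the relocated files is my assumption.

```powershell
# Hypothetical helper: builds a RESTORE DATABASE statement with one MOVE
# clause per file. $FileList is expected to expose the LogicalName and Type
# columns returned by RESTORE FILELISTONLY ('D' = data file, 'L' = log file).
function New-RestoreStatement {
    param(
        [Parameter(Mandatory)][object[]]$FileList,
        [Parameter(Mandatory)][string]$TargetDatabase,
        [Parameter(Mandatory)][string]$BackupFile,
        [Parameter(Mandatory)][string]$DataPath
    )
    $dataIndex = 0
    $logIndex = 0
    $moves = foreach ($file in $FileList) {
        if ($file.Type -eq 'L') {
            # First log file gets "_log", later ones "_log1", "_log2", ...
            $suffix = if ($logIndex -eq 0) { '_log' } else { "_log$logIndex" }
            $physical = '{0}\{1}{2}.ldf' -f $DataPath, $TargetDatabase, $suffix
            $logIndex++
        } else {
            # First data file is the .mdf, later ones become numbered .ndf files
            $physical = if ($dataIndex -eq 0) {
                '{0}\{1}.mdf' -f $DataPath, $TargetDatabase
            } else {
                '{0}\{1}_{2}.ndf' -f $DataPath, $TargetDatabase, $dataIndex
            }
            $dataIndex++
        }
        "MOVE '$($file.LogicalName)' TO '$physical'"
    }
    "RESTORE DATABASE [$TargetDatabase] FROM DISK = '$BackupFile' WITH REPLACE, $($moves -join ', '), STATS = 5;"
}

# Possible usage (requires a reachable SQL Server, so left as a comment):
# $files = Invoke-Sqlcmd -ServerInstance $serverInstance -Query "RESTORE FILELISTONLY FROM DISK = '$backupFilePath'"
# $sql   = New-RestoreStatement -FileList $files -TargetDatabase $targetDatabaseName -BackupFile $backupFilePath -DataPath $sqlDataPath
```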

Next we need to change the recovery mode (SQL Server’s term is recovery model) from full to simple. Under the simple model the transaction log is truncated at each checkpoint, which lets us manage the growth of the log files. This is important because the delete commands we run will write every change into the database log, which we’ll need to shrink as often as possible (otherwise the DB server could potentially run out of space).

    # Change recovery mode to simple
    Write-Information "Setting recovery mode to simple"
    Invoke-Sqlcmd -ServerInstance $serverInstance -Database $targetDatabaseName -Query "ALTER DATABASE $($targetDatabaseName) SET RECOVERY SIMPLE"
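If the source database is not always in full recovery, it may be worth capturing the current setting first so it can be put back later instead of hard-coding FULL. A rough sketch, assuming two hypothetical helpers (`Get-RecoveryModelQuery` and `New-RecoveryModelStatement`) that only build the T-SQL text:

```powershell
# Hypothetical helper: query that reads the current recovery model
# (FULL, BULK_LOGGED or SIMPLE) from the sys.databases catalog view.
function Get-RecoveryModelQuery {
    param([Parameter(Mandatory)][string]$Database)
    "SELECT recovery_model_desc FROM sys.databases WHERE name = N'$Database';"
}

# Hypothetical helper: ALTER DATABASE statement for a validated model name.
function New-RecoveryModelStatement {
    param(
        [Parameter(Mandatory)][string]$Database,
        [Parameter(Mandatory)][ValidateSet('FULL', 'SIMPLE', 'BULK_LOGGED')][string]$Model
    )
    "ALTER DATABASE [$Database] SET RECOVERY $Model;"
}

# Possible usage (requires a reachable SQL Server, so left as a comment):
# $original = (Invoke-Sqlcmd -ServerInstance $serverInstance -Query (Get-RecoveryModelQuery -Database $targetDatabaseName)).recovery_model_desc
# Invoke-Sqlcmd -ServerInstance $serverInstance -Query (New-RecoveryModelStatement -Database $targetDatabaseName -Model SIMPLE)
# ... purge ...
# Invoke-Sqlcmd -ServerInstance $serverInstance -Query (New-RecoveryModelStatement -Database $targetDatabaseName -Model $original)
```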

With the database in simple recovery mode we can now start purging content from the restored database. Before digging into the logic of the deletes, I’ll need to create a function I can call that traps errors. I’ll also want to be able to incrementally shrink, if necessary.

    # Function below is used so that we trap errors and shrink at certain times
    function ExecuteSqlStatement {
        param([String]$Instance, [String]$Database, [String]$SqlStatement, [bool]$shrink = $false)
        # Track failure in $failed rather than $error, which is a PowerShell automatic variable
        $failed = $false
        # Trap all errors
        try {
            # Execute the statement (-ErrorAction Stop turns failures into terminating errors the catch can see)
            Write-Debug "Executing Statement on $($Instance) in DB $($Database): $($SqlStatement)"
            Invoke-Sqlcmd -ServerInstance $Instance -Database $Database -Query $SqlStatement -ErrorAction Stop | Out-Null
            Write-Debug "Statement executed with no exceptions"
        } catch {
            Write-Error "Exception Executing Statement: $($_)"
            $failed = $true
        }
        # When no error and parameter passed, shrink the DB (can slow process)
        if ( -not $failed -and $shrink ) {
            ShrinkDatabase -SQLInstanceName $Instance -DatabaseName $Database -FileGroupName $databaseFileGroup -FileName $databaseFileName -ShrinkSizeMB 48000 -ShrinkIncrementMB 20
        }
    }

To implement the incremental shrinking I use a method I found a few years ago, linked here. It’s great as it works around the super-slow shrinking process when done on very large datasets. Your database administrator should pay close attention to how it works and align it with your environment.
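I won’t reproduce the linked method here, but the general idea of shrinking in small steps can be sketched roughly as follows. This is not the ShrinkDatabase function my script calls; `Get-ShrinkTargets` and `New-ShrinkStatement` are hypothetical names, and the sizes are placeholders.

```powershell
# Hypothetical helper: emits the sequence of target sizes (in MB) to step
# through, from the current size down to the final target.
function Get-ShrinkTargets {
    param(
        [Parameter(Mandatory)][int]$CurrentSizeMB,
        [Parameter(Mandatory)][int]$TargetSizeMB,
        [Parameter(Mandatory)][int]$IncrementMB
    )
    if ($IncrementMB -le 0) { throw 'IncrementMB must be greater than zero' }
    $size = $CurrentSizeMB
    while ($size -gt $TargetSizeMB) {
        # Step down by one increment, but never below the final target
        $size = [Math]::Max($size - $IncrementMB, $TargetSizeMB)
        $size
    }
}

# Hypothetical helper: DBCC SHRINKFILE statement for one step
# (the target size argument is expressed in MB).
function New-ShrinkStatement {
    param(
        [Parameter(Mandatory)][string]$LogicalFileName,
        [Parameter(Mandatory)][int]$TargetSizeMB
    )
    "DBCC SHRINKFILE (N'$LogicalFileName', $TargetSizeMB);"
}

# Possible usage (requires a reachable SQL Server, so left as a comment):
# foreach ($target in Get-ShrinkTargets -CurrentSizeMB 70000 -TargetSizeMB 48000 -IncrementMB 500) {
#     Invoke-Sqlcmd -ServerInstance $serverInstance -Database $targetDatabaseName -Query (New-ShrinkStatement -LogicalFileName $databaseFileName -TargetSizeMB $target)
# }
```

Many small steps mean each DBCC call has less to move, at the cost of more round trips; the right increment depends on your environment.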

My goal is to remove all records of certain types, so that they aren’t exposed in the restored copy. Unfortunately the out-of-the-box constraints do not cascade and delete related objects. That means we need to delete them before trying to delete the records.

We need to delete:

1. Workflows (and supporting objects)
2. Records (and supporting objects)
3. Record Types

In very large datasets this process could take hours. To reclaim disk space along the way, add a “-shrink $true” parameter to any of the delete statements that impact large volumes of data in your org (electronic revisions, renditions, and locations, for instance). Each shrink slows the run, so use it only where it matters.

    # Purging content by record type uri
    foreach ( $rtyUri in $recTypeUris ) {
        Write-Warning "Purging All Records & Supporting Objects for Record Type Uri $($rtyUri)"
        Write-Information " - Purging Workflow Document References"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tswkdocusa where wduDocumentUri in (select uri from tswkdocume where wdcRecordUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri))"
        Write-Information " - Purging Workflow Documents"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tswkdocume where wdcRecordUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri)"
        Write-Information " - Purging Workflow Activity Start Conditions"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tswkstartc where wscActivityUri in (select uri from tswkactivi where wacWorkflowUri in (select uri from tswkworkfl where wrkInitiator in (select uri from tsrecord where rcRecTypeUri=$rtyUri)))"
        Write-Information " - Purging Workflow Activities"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tswkactivi where wacWorkflowUri in (select uri from tswkworkfl where wrkInitiator in (select uri from tsrecord where rcRecTypeUri=$rtyUri))"
        Write-Information " - Purging Workflows"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tswkworkfl where wrkInitiator in (select uri from tsrecord where rcRecTypeUri=$rtyUri)"
        Write-Information " - Purging Communications Detail Words"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tstranswor where twdTransDetailUri in (select tstransdet.uri from tstransdet inner join tstransmit on tstransdet.tdTransUri = tstransmit.uri inner join tsrecord on tstransmit.trRecordUri = tsrecord.uri where tsrecord.rcRecTypeUri = $rtyUri);"
        Write-Information " - Purging Communications Details"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tstransdet where tdTransUri in (select tstransmit.uri from tstransmit inner join tsrecord on tstransmit.trRecordUri = tsrecord.uri where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Communications"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tstransmit where trRecordUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Thesaurus Terms"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsrecterm where rtmRecordUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Relationships"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsreclink where rkRecUri1 in (select uri from tsrecord where rcRecTypeUri=$rtyUri) OR rkRecUri2 in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Actions"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsrecactst where raRecordUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Jurisdictions"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsrecjuris where rjRecordUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Requests"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsrecreque where rqRecordUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Rendition Queue"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsrendqueu where rnqRecUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Renditions"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsrenditio where rrRecordUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Revisions"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tserecvsn where evRecElecUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Documents"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsrecelec where uri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Holds"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tscasereco where crRecordUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Record Locations"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsrecloc where rlRecUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri);"
        Write-Information " - Purging Records (shrink after)"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsrecord where rcRecTypeUri = $rtyUri" -shrink $true
        Write-Information " - Purging Record Types"
        ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement "delete from tsrectype where uri = $rtyUri"
    }
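On very large tables, a single delete statement runs as one big transaction, which is what drives the log growth. A common alternative is to delete in batches so that each batch commits on its own and, under simple recovery, log space can be reused between batches. The sketch below is not what my script does; `New-BatchedDelete` is a hypothetical helper and the batch size is an arbitrary starting point.

```powershell
# Hypothetical helper: wraps a delete in a T-SQL loop that removes at most
# $BatchSize rows per iteration until no matching rows remain.
function New-BatchedDelete {
    param(
        [Parameter(Mandatory)][string]$Table,
        [Parameter(Mandatory)][string]$Where,
        [int]$BatchSize = 10000
    )
    $lines = @(
        'DECLARE @rows INT = 1;',
        'WHILE @rows > 0',
        'BEGIN',
        "    DELETE TOP ($BatchSize) FROM $Table WHERE $Where;",
        '    SET @rows = @@ROWCOUNT;',
        'END'
    )
    $lines -join "`n"
}

# Possible usage with the function from this post (left as a comment):
# $sql = New-BatchedDelete -Table 'tsrecloc' -Where "rlRecUri in (select uri from tsrecord where rcRecTypeUri=$rtyUri)"
# ExecuteSqlStatement -Instance $serverInstance -Database $targetDatabaseName -SqlStatement $sql
```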

With that out of the way we can now restore the recovery mode back to full.

    # Change recovery mode back to full
    Write-Information "Setting recovery mode to full"
    Invoke-Sqlcmd -ServerInstance $serverInstance -Database $targetDatabaseName -Query "ALTER DATABASE $($targetDatabaseName) SET RECOVERY FULL"

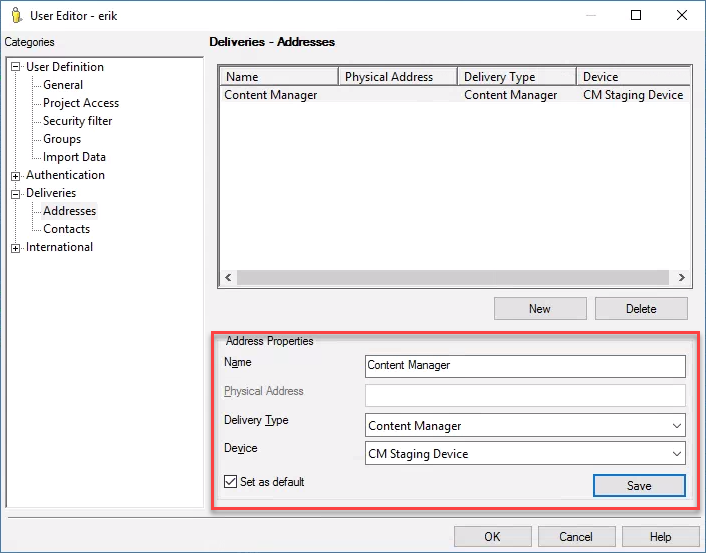

Last step is to restart the workgroup service on the server using this restored database.

    # Restart CM
    Write-Warning "Restarting Content Manager Service"
    Restart-Service -Force -Name $cmServiceName

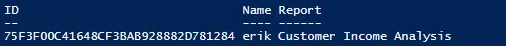

At the top of my script I have all of the variables defined. To make this work for you, you’ll need to adjust the variables to align with your environment. For instance, you’ll need to update the $recTypeUris array to contain the URIs of the record types you want deleted.

    # Variables to be used throughout the script
    $serverInstance = "localhost" # SQL Server instance name
    $sourceDatabaseName = "CMRamble_93" # Name of database in SQL Server
    $targetDatabaseName = "Restored_cmramble_93" # Name to restore to in SQL Server
    $backupFileName = "$($sourceDatabaseName).bak" # File name for backup of database
    $backupPath = "C:\temp" # Folder to back-up into (relative to server); PowerShell needs no backslash escaping
    $backupFilePath = [System.IO.Path]::Combine($backupPath, $backupFileName) # Use .Net's path join which honors OS
    $databaseFileGroup = "PRIMARY" # File group of database content, used when shrinking
    $databaseFileName = "CMRamble_93" # Filename within file group, used when shrinking
    $sqlDataPath = "D:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\DATA" # Path to data files, used in restore
    $cmServiceName = "TRIMWorkgroup" # Name of registered service in OS (not display name)
    $recTypeUris = @( 3 ) # Array of uri's for record types to be purged after restore

To find the URIs of your record types, you’ll need to customize your view pane and add in the unique identifier property.
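Before purging, it can be reassuring to check how many records each of those URIs actually covers. Here is a small sketch that builds a count query using only the table and column names already seen in the delete statements (`tsrecord`, `rcRecTypeUri`); `Get-RecordCountQuery` is a hypothetical helper and not part of my script.

```powershell
# Hypothetical helper: builds a query that counts records per record type.
# Typing the parameter as [int[]] rejects anything that is not a number.
function Get-RecordCountQuery {
    param([Parameter(Mandatory)][int[]]$RecTypeUris)
    $list = $RecTypeUris -join ', '
    "SELECT rcRecTypeUri, COUNT(*) AS RecordCount FROM tsrecord WHERE rcRecTypeUri IN ($list) GROUP BY rcRecTypeUri;"
}

# Possible usage (requires a reachable SQL Server, so left as a comment):
# Invoke-Sqlcmd -ServerInstance $serverInstance -Database $sourceDatabaseName -Query (Get-RecordCountQuery -RecTypeUris $recTypeUris)
```

If a URI comes back with no rows, or with far more rows than expected, that is a good moment to double-check the array before running the purge.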

Running the script gives me this output….

On my local machine it took ~1 minute to complete for a super small dataset. Running it against a 70 GB file and removing approximately 20 GB of content takes 15 minutes, though my SQL Server has 64 GB of RAM and two SSD drives that hold the SQL Server data & log files.

You can download my full script here: https://github.com/aberrantCode/cm_db_restore_and_purge