Content Manager 9.2 Nuggets

Here's a collection of changes introduced in 9.2...

IDOL is gone, sort of

For end users, absolutely nothing has changed. They will still search as they always have. However, document content indexing should now be implemented with Elasticsearch. If you aren't jumping on that bandwagon, it's probably because you've invested in other uses for IDOL, and you can still configure 9.2 to use IDOL if you need to. For everyone else, Elasticsearch is the future.

You can see that both are still referenced on the administration ribbon. In my environment I have no IDOL components, but the toolbar options are still there. I'll happily ignore the IDOL ones.

Elasticsearch build-out

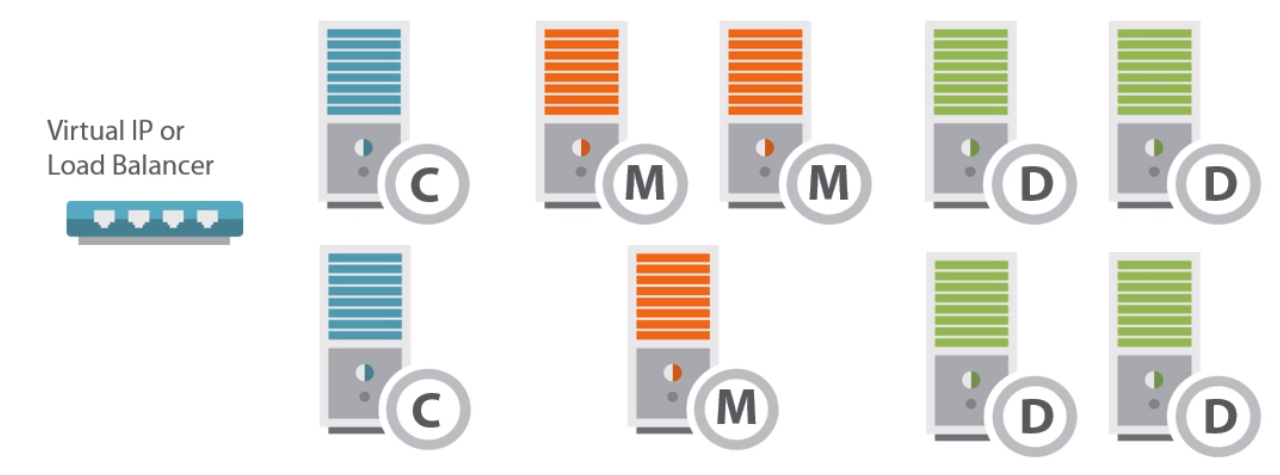

You can quickly find yourself unsure how to resource this new technology. The course I took rationalized 9 servers and over 128 GB of RAM for a database of just 1 million documents, and the diagram above is the one used for that example. Although I'm sure it's entirely unrealistic, it highlights what's meant by "elastic".

As time permits I'll post more about how to properly design an Elasticsearch infrastructure. KeyView is still used to extract the content fed to the engine, and the engine itself is managed via REST commands. Unlike IDOL, though, there are some really cool user interfaces for managing it (some at a minor cost).
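Since the engine is driven over REST, a cluster health check is a handy first command to get comfortable with. The sketch below calls the standard `_cluster/health` endpoint and condenses its JSON reply into one line. It assumes an unsecured node on Elasticsearch's default port 9200 at `localhost`; a secured cluster will need HTTPS and credentials, which this sketch does not handle.

```python
import json
import urllib.request

# Assumption: an unsecured node on the default port; adjust for your environment.
DEFAULT_URL = "http://localhost:9200/_cluster/health"


def fetch_cluster_health(url: str = DEFAULT_URL, timeout: float = 5.0) -> dict:
    """GET the _cluster/health endpoint and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def summarize_health(health: dict) -> str:
    """Condense a _cluster/health response into a one-line summary."""
    name = health.get("cluster_name", "?")
    status = health.get("status", "unknown")  # green, yellow or red
    nodes = health.get("number_of_nodes", 0)
    unassigned = health.get("unassigned_shards", 0)
    return f"{name}: {status}, {nodes} node(s), {unassigned} unassigned shard(s)"
```

Usage against a live node: `print(summarize_health(fetch_cluster_health()))`. Keeping the fetch and the formatting separate means the summary logic can be checked without a running cluster.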

Navigating via Keyboard

When working with tree views you can no longer use the arrow keys to expand or collapse nodes, for example when trying to expand a category...

Navigating categories

Instead, press + to expand a node or - to collapse it. Alternatively, press Ctrl + asterisk (*) on the numeric keypad to expand the structure recursively. As noted in the comments, a bug report has been raised for this issue.

Webclient Record Nesting

You can now expand a container to see its contents, and then expand those contents in turn to keep drilling down.

Webclient Tabs

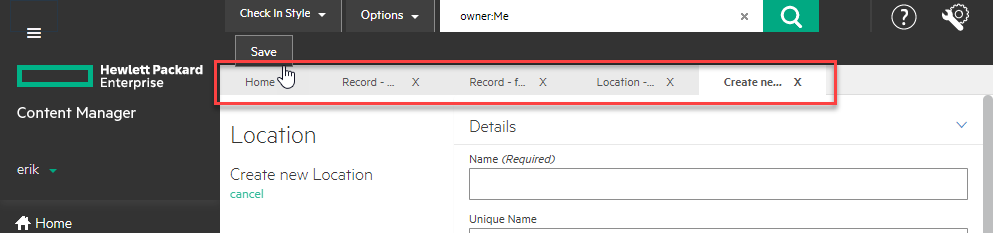

Tabs now appear as you perform actions. You could have a search result in one tab, a new location in another, and saved searches in a third. I do not see any option to have tabs auto-open at startup (like the thick client has).

Metadata Validation Rules

There's a new feature that aims to solve some of the validation challenges we have with metadata. You can find the feature off the administration ribbon...

Right-click->New to create a new one

Here's the configuration dialog...

Silly me tries something silly....

Does not compute...

The help file says...

LookupSet Changes

There are many new options here. Here's the property page for a "States" lookup set. Note how I've enabled both short and long values on the items.

With this configuration, the lookup item property page looks like this...

Both Short and Long Value

Here's what a few entries look like with comments enabled...

Hierarchical Lookup Items

I created a lookup set named "Old Projects" and defined it as follows:

Then I created a few entries, some of which have sub-entries.

Here's how it appears to end users...

Error message shown if the user doesn't pick a "lowest level" item.

Once a valid value is selected, this is how it is saved into the field....
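The "lowest level only" rule behaves like a leaf check on a tree. Here's a minimal sketch of that rule, assuming a hypothetical in-memory representation of the lookup set (a dict mapping each item to its sub-entries). It is not Content Manager's data model or API, and the project names are made up.

```python
# Hypothetical model of a hierarchical lookup set: each item maps to its sub-entries.
OLD_PROJECTS = {
    "Project A": {"Phase 1": {}, "Phase 2": {}},
    "Project B": {},
}


def find_item(tree: dict, name: str):
    """Depth-first search; returns the item's sub-entry dict, or None if absent."""
    for item, children in tree.items():
        if item == name:
            return children
        found = find_item(children, name)
        if found is not None:  # an empty dict is a valid (leaf) match
            return found
    return None


def validate_selection(tree: dict, name: str) -> None:
    """Raise ValueError unless `name` exists and has no sub-entries (a leaf)."""
    children = find_item(tree, name)
    if children is None:
        raise ValueError(f"{name!r} is not in the lookup set")
    if children:
        raise ValueError(f"{name!r} has sub-entries; pick a lowest-level item")
```

Picking "Phase 1" or "Project B" passes, while "Project A" raises, mirroring the error the end user sees. If the same name appeared under two branches, a real implementation would need a full path rather than a bare name; this sketch simply takes the first match.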

Lookup Items in Custom Properties

You can now pick Lookup Item when selecting the format of a custom property, as shown below...

Then you pick the lookup set from which the user will pick one item...

Then you give it a name...

For demonstration purposes I'll associate it with categories...

Before demonstrating it, I created another custom property of type string, made it use the same lookup set as its source, and also associated it with the category object.

Next I selected Florida for both fields on an example category (the user experience was identical when selecting a value)...

After saving, I can see one major difference in the view pane....

The Lookup Item is a hyperlink whereas the string is just text.

Regardless of which route you take, modifications to the Lookup Item are reflected across the dataset. In the screenshot below, the selected state on the category shows a new value even though I only updated the Lookup Item (and not the category).

Change is reflected even though I didn't modify category
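One way to picture the difference between the two fields is storing a reference versus storing a copy. The sketch below illustrates that general idea with hypothetical classes; it is not Content Manager's internals. It also assumes the string property keeps the text it was saved with, which the plain-text display suggests but which isn't shown above. The edited value "Florida (FL)" is made up.

```python
from dataclasses import dataclass


@dataclass
class LookupItem:
    """Hypothetical stand-in for a lookup item with a single value."""
    value: str


@dataclass
class Category:
    state_item: LookupItem  # Lookup Item property: holds a reference to the item
    state_text: str  # String property: holds a copy of the text at save time


florida = LookupItem("Florida")
category = Category(state_item=florida, state_text=florida.value)

# Edit only the lookup item, not the category.
florida.value = "Florida (FL)"

print(category.state_item.value)  # Florida (FL)
print(category.state_text)  # Florida
```

The reference-based field follows the edit automatically, which is the behavior shown in the screenshot; a copied string would have to be updated record by record.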

SQL Database Changes

Schema Changes:

1 table removed, 3 views removed, 5 tables added, and 2 new encryption functions

Across the board, it appears all VARCHAR columns have been converted to NVARCHAR. Below you can see some of the results of a schema comparison, with the example column highlighted.

Check-in Style Email Clean-up Option

In the previous version you could "Keep email in the mail system" and/or "Move deleted email to Deleted Items" (or neither). Now you can only select one of these three options: Permanent Delete, Move to Deleted Items, Retain in Mail System.

9.2 Options

9.1 Options
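In data terms, two independent checkboxes (four combinations) have become one choice out of three. The sketch below shows how the old settings might translate. The mapping is an assumption: it treats "Keep email in the mail system" as winning over the Deleted Items option (nothing is deleted if the email is kept), and reads "neither" as a permanent delete. It is not a documented upgrade rule.

```python
from enum import Enum


class EmailCleanup(Enum):
    """The three mutually exclusive 9.2 options."""
    PERMANENT_DELETE = "Permanent Delete"
    MOVE_TO_DELETED_ITEMS = "Move to Deleted Items"
    RETAIN_IN_MAIL_SYSTEM = "Retain in Mail System"


def from_91_flags(keep_in_mail_system: bool, move_to_deleted_items: bool) -> EmailCleanup:
    """Map the two 9.1 checkboxes onto one 9.2 option (assumed mapping)."""
    if keep_in_mail_system:
        # Assumption: keeping the email makes the Deleted Items flag irrelevant.
        return EmailCleanup.RETAIN_IN_MAIL_SYSTEM
    if move_to_deleted_items:
        return EmailCleanup.MOVE_TO_DELETED_ITEMS
    # Assumption: neither box checked meant the email was removed outright.
    return EmailCleanup.PERMANENT_DELETE
```

The enum makes the contradictory 9.1 combination (keep the email *and* move it to Deleted Items) impossible to express, which is presumably why the option was reworked.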

Check-in Styles Link off Administration Ribbon

Administrators can gain access to all check-in styles from here...

Classification/Category Specific Record Types

Limit which record types can be associated with a classification or category...

If a user attempts to pick a record type that isn't allowed, they get an error...
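Conceptually, the restriction is an allow-list per classification. The sketch below models it with a hypothetical mapping of classification titles to permitted record types; the titles, record type names, and the rule that an unlisted classification accepts any record type are all assumptions for illustration.

```python
# Hypothetical configuration: classification title -> record types it permits.
# A classification missing from this mapping is treated as unrestricted (assumption).
ALLOWED_RECORD_TYPES = {
    "Finance - Invoices": {"Document"},
    "Human Resources - Personnel": {"Document", "Personnel File"},
}


def check_record_type(classification: str, record_type: str) -> None:
    """Raise ValueError if the classification restricts record types and this one isn't listed."""
    allowed = ALLOWED_RECORD_TYPES.get(classification)
    if allowed is not None and record_type not in allowed:
        raise ValueError(
            f"Record type {record_type!r} cannot be used with classification {classification!r}"
        )
```

For example, `check_record_type("Finance - Invoices", "Personnel File")` raises, which corresponds to the error the user sees in the client.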

Automatically Declare as Final

I would think this makes sense for email, but here's what the help says...

“If the document store is enabled for SEC compliance then this option will be enabled, see About SEC Rule 17a-4 Compliance rules. Whenever a record is created that has a document attached, the record will be automatically finalized at the time it is saved to the database. If you attach a document to an existing record that doesn’t have a document attached, then that record will be finalized. The options for creating revisions are prevented.”
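The three rules in that help text can be modelled as a tiny state machine. This is a hypothetical sketch of the described behavior, not Content Manager's SDK: the `Record` class and its methods are invented, and it ignores the SEC-compliant document store dependency. It also leaves one case alone that the help doesn't cover (attaching to an existing record that already has a document but isn't final).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    """Hypothetical record whose record type has Automatically Declare as Final set."""
    document: Optional[str] = None
    finalized: bool = False

    def save_new(self) -> None:
        # Rule 1: a new record saved with a document attached is finalized on save.
        if self.document is not None:
            self.finalized = True

    def attach_document(self, path: str) -> None:
        # Rule 3: once final, creating revisions is prevented.
        if self.finalized:
            raise PermissionError("record is final; new revisions are not allowed")
        was_empty = self.document is None
        self.document = path
        # Rule 2: attaching to an existing record with no document finalizes it.
        if was_empty:
            self.finalized = True
        # Not covered by the help text: a non-final record that already had a
        # document is left unfinalized here.
```

A record saved without a document stays editable until the first attachment, after which any further `attach_document` call raises.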

Here's the option on the record type:

Default Copy Style for Referenced Access Controls

When creating a record type or classification, you can define the behavior of referenced access controls. How you access these options has changed: clicking Copy Style now shows a popup.

In the previous version you accessed it from a second tab...

Update Last Action Date when Viewing

In previous versions a global setting controlled whether the last action date was updated when a record was viewed. This setting has now moved to the record type level, and there is no longer a global setting for this behavior.

Confirm Preview of Document

When this is enabled, the user must click anywhere within the preview window to confirm their intention to view the record. This was always a personal concern of mine, as an administrator may accidentally leave the preview pane enabled whilst perusing search results (with no intention or desire to actually see the records).

Toggle this setting on the record type's audit property page

Feature Activation Required

If you attempt to enable the External Stores, Manage In Place, EMC Storage, or iTernity Content Addressable Storage features, you'll be prompted for an activation key for each...

Render to PDF after save

This one has probably been around for a few versions, but I only just noticed it...