Automatically Creating Alerts for Users

A question came up on the forum about whether a notification can be sent to users when they've been selected in a custom property. For instance, when a request for information is registered I might want to alert someone. Whoever is selected should get a notice.

This behavior is really what the "assignee" feature is meant to handle. Users use their in-tray to see these newly created items. However, the email notification options related to that feature are fairly limited. If I, as the user, create an alert then I can receive a notification that a record has been created and assigned to me.

Here are the properties for the alert...

Now the challenge here is that administrators cannot access alerts for other users. We could tell each user to do this themselves, but then I wouldn't have much to write about. So instead I create another user and craft a script.

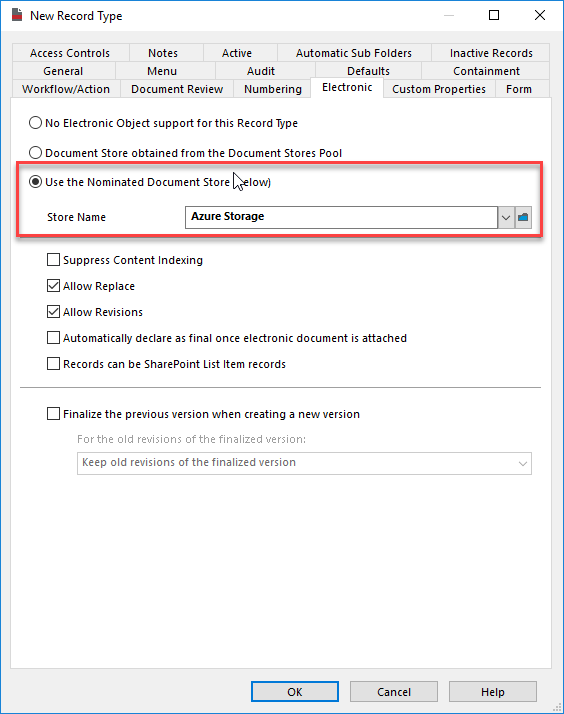

The script needs to find all users that can log in and create an alert for each user who doesn't already have one. To do that we'll have to impersonate those users. That requires that you either run the script as the service account or add the impersonating account to the Enterprise Studio options. You can see below the option I'm referencing.

This is how it appears after you've added the account...

Now I need a PowerShell script that finds all of the users and then connects as each user to search for an existing alert. If it can't find one, it creates a new alert over the impersonated connection. This script can then be scheduled to run once every evening. The audit logs will show that this impersonation happened, so every impersonated connection remains traceable.

    Clear-Host
    $LocationCustomPropertyName = "Alert Location"
    Add-Type -Path "D:\Program Files\Hewlett Packard Enterprise\Content Manager\HP.HPTRIM.SDK.dll"
    $Database = New-Object HP.HPTRIM.SDK.Database
    $Database.Connect()
    $LocationCustomProperty = [HP.HPTRIM.SDK.FieldDefinition]$Database.FindTrimObjectByName([HP.HPTRIM.SDK.BaseObjectTypes]::FieldDefinition, $LocationCustomPropertyName)
    #Find all locations that can login
    $Users = New-Object HP.HPTRIM.SDK.TrimMainObjectSearch -ArgumentList $Database, Location
    $Users.SearchString = "login:* not uri:$($Database.CurrentUser.Uri)"
    Write-Host "Found $($Users.Count) users"
    foreach ( $User in $Users )
    {
        try {
            #Impersonate this user so we can find his/her alerts
            $TrustedDatabase = New-Object HP.HPTRIM.SDK.Database
            $TrustedDatabase.TrustedUser = $User.LogsInAs
            $TrustedDatabase.Connect()
            Write-Host "Connected as $($TrustedDatabase.CurrentUser.FullFormattedName)"
            #formulate criteria string for this user
            $CriteriaString = "$($LocationCustomProperty.SearchClauseName):[default:me]"
            #search using impersonated connection
            $Alerts = New-Object HP.HPTRIM.SDK.TrimMainObjectSearch $TrustedDatabase, Alert
            $Alerts.SearchString = "user:$($User.Uri) eventType:added"
            #can't search on criteria so have to inspect each to see if already exists
            Write-Host "User $(([HP.HPTRIM.SDK.Location]$User).FullFormattedName) has $($Alerts.Count) alerts"
            $AlertExists = $false
            foreach ( $Alert in $Alerts )
            {
                if ( ([HP.HPTRIM.SDK.Alert]$Alert).Criteria -eq $CriteriaString )
                {
                    $AlertExists = $true
                }
            }
            #when not existing we create it
            if ( $AlertExists -eq $false )
            {
                $UserAlert = New-Object HP.HPTRIM.SDK.Alert -ArgumentList $TrustedDatabase
                $UserAlert.Criteria = $CriteriaString
                #$UserAlert.ChildSubscribers.NewSubscriber($TrustedDatabase.CurrentUser)
                $UserAlert.Save()
                Write-Host "Created an alert for $($User.FullFormattedName)"
            } else {
                Write-Host "Alert found for $($User.FullFormattedName)"
            }
        } catch [HP.HPTRIM.SDK.TrimException] {
            Write-Host "$($_)"
        }
    }

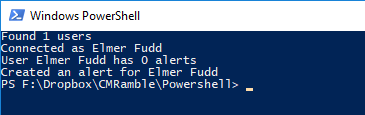

When I run the script I get these results....

Ah! When I created Elmer I didn't give him an email address. I could update this script to automatically create email addresses for users, exclude such users from the initial search (by adding "email:*" to the search string), or send a log of this script to an email address for manual rectification.
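Excluding users without an email address is the smallest of those changes. Here is a minimal sketch of how the search string could be built, assuming the "email:*" clause can be joined to the existing "login:*" clause with `and` (I haven't verified that against every version). The helper name `Get-UserSearchString` and its parameters are made up for illustration and are not part of the SDK.

```powershell
# Hypothetical helper: builds the search string used to find users that can log in.
function Get-UserSearchString {
    param(
        [Parameter(Mandatory)] $ExcludeUri,   # URI of the account running the script
        [switch] $RequireEmail                # skip users that have no email address
    )
    $Clauses = @('login:*')
    if ($RequireEmail) {
        # Assumption: clauses can be combined with "and" in the search string
        $Clauses += 'email:*'
    }
    return "$($Clauses -join ' and ') not uri:$ExcludeUri"
}

# Example with a made-up URI of 9
Get-UserSearchString -ExcludeUri 9 -RequireEmail
```

In the script above, the result would be assigned to `$Users.SearchString`, passing `$Database.CurrentUser.Uri` as the URI to exclude.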

For now I manually fix it and run the script again.

If I run it a second time I can see it doesn't re-create it for him.

Success! Now just schedule this to run on a server once every day and you have a viable solution to the posted question. Before implementation you might adjust the script to only target those users who would ever be selected for the custom property (if that's possible).
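For the scheduling step, one option is the built-in ScheduledTasks cmdlets on the server. This is only a sketch: the script path, task name, and run time are placeholders I picked, and the task has to run as an account that is allowed to impersonate (the service account, or the account added in the Enterprise Studio options).

```powershell
# Hypothetical location of the saved script; adjust to wherever you keep it
$ScriptPath = 'C:\Scripts\Create-UserAlerts.ps1'

# Run the script with Windows PowerShell, without loading a user profile
$Action = New-ScheduledTaskAction -Execute 'powershell.exe' `
    -Argument "-NoProfile -File `"$ScriptPath`""

# Once every evening; the time is an arbitrary choice
$Trigger = New-ScheduledTaskTrigger -Daily -At '9:00 PM'

# Needs an elevated session. Add -User and -Password to run the task
# as a specific account rather than the one registering it.
Register-ScheduledTask -TaskName 'Create user alerts' -Action $Action -Trigger $Trigger
```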