Automating movement to cheaper storage

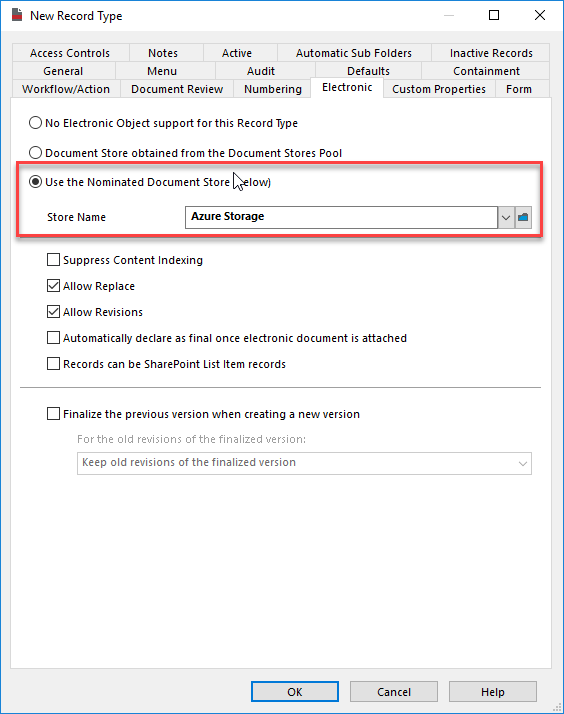

Now that I have an Azure Blob store configured, I want to have documents moved there after they haven't been used for a while. I've previously shown how to do this manually within the client, but now I'll show how to automate it.

The script is straightforward and follows these steps:

- Find the target store in CM

- Find all documents to be moved

- Move each document to the target store

Here's an implementation of this in PowerShell:

    Clear-Host
    Add-Type -Path "D:\Program Files\Hewlett Packard Enterprise\Content Manager\HP.HPTRIM.SDK.dll"

    $LocalStoreName = "Main Document Store"
    $AzureStoreName = "Azure Storage"
    $SearchString = "store:$($LocalStoreName) and accessedOn<Previous Year"

    $Database = New-Object HP.HPTRIM.SDK.Database
    $Database.Connect()

    #fetch the store and exit if missing
    $Tier3Store = $Database.FindTrimObjectByName([HP.HPTRIM.SDK.BaseObjectTypes]::ElectronicStore, $AzureStoreName)
    if ( $Tier3Store -eq $null ) {
        Write-Error "Unable to find store named '$($AzureStoreName)'"
        exit
    }

    #search for records eligible for transfer
    $Records = New-Object HP.HPTRIM.SDK.TrimMainObjectSearch -ArgumentList $Database, Record
    $Records.SearchString = $SearchString
    Write-Host "Found $($Records.Count) records"

    $x = 0
    #transfer each record
    foreach ( $Result in $Records ) {
        $Record = [HP.HPTRIM.SDK.Record]$Result
        $Record.TransferStorage($Tier3Store, $true)
        Write-Host "Record $($Record.Number) transferred"
        $x++
    }

I ran it to get the results below. I forced it to stop after the first record for demonstration purposes, but you should get the idea.
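
One way to cap a run like that, sketched here rather than taken from the script I actually ran, is to wrap the transfer loop in a small helper that stops after a set number of successful transfers and keeps going when a single record fails. The function name `Move-RecordsToStore` and its `-MaxRecords` parameter are my own hypothetical names, not part of the Content Manager SDK. The only SDK call is the same `TransferStorage` used in the script, and the sketch assumes PowerShell resolves that method on each search result without the explicit cast.

```powershell
# Hypothetical helper (not part of the Content Manager SDK): transfers records
# to $Store until $MaxRecords have succeeded, and returns that count.
function Move-RecordsToStore {
    param(
        $Records,                             # e.g. the TrimMainObjectSearch built in the script
        $Store,                               # e.g. $Tier3Store
        [int] $MaxRecords = [int]::MaxValue   # cap for trial runs
    )

    $moved = 0
    foreach ( $Record in $Records ) {
        if ( $moved -ge $MaxRecords ) { break }
        try {
            # Same call as in the script; [void] keeps any return value
            # out of the function's output
            [void]$Record.TransferStorage($Store, $true)
            Write-Host "Record $($Record.Number) transferred"
            $moved++
        }
        catch {
            # Report the failure and carry on with the next record
            Write-Warning "Record $($Record.Number) failed: $($_.Exception.Message)"
        }
    }
    return $moved
}

# Usage with the variables from the script: stop after the first record
# $count = Move-RecordsToStore -Records $Records -Store $Tier3Store -MaxRecords 1
```

Because failed records do not count toward the cap, a trial run with `-MaxRecords 1` ends only once one record has actually moved or the search results run out.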