Adding Geolocation to my JSON Import

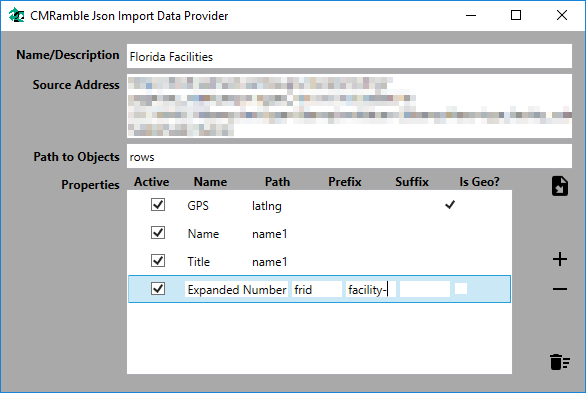

My initial import only mapped the facility ID into the expanded record number property and the name into the title. The JSON response also includes a "latlng" property I can import. It holds two decimal numbers, a latitude and a longitude, separated by a comma. When I map it to the GPS Location field in Content Manager, I get this error message:

> Details: Setting property failed. Item Index: 295, Property Caption: 'GPS Location', Value: 27.769625, -82.767725 Exception message: You have not entered a correct geographic location. Try POINT(-122.15437906 37.443134073) or use the map interface to mark a point.
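The error message hints at the format Content Manager wants. Its example, POINT(-122.15437906 37.443134073), is a Well-Known Text (WKT) point, which lists longitude first and latitude second, separated by a space. Assuming the "latlng" property holds latitude then longitude, as its name suggests, a converter has to split on the comma and swap the order. A minimal Python sketch follows; `latlng_to_wkt_point` is a hypothetical name, not the formatter's actual code.

```python
def latlng_to_wkt_point(latlng: str) -> str:
    """Convert a "lat, lng" string into a WKT point, which lists longitude first."""
    parts = [p.strip() for p in latlng.split(",")]
    if len(parts) != 2:
        raise ValueError(f"expected 'lat, lng', got {latlng!r}")
    lat, lng = float(parts[0]), float(parts[1])  # also rejects non-numeric input
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lng <= 180.0):
        raise ValueError(f"coordinates out of range: {latlng!r}")
    # Reuse the original text so float formatting neither loses nor adds digits.
    return f"POINT({parts[1]} {parts[0]})"


print(latlng_to_wkt_point("27.769625, -82.767725"))  # POINT(-82.767725 27.769625)
```

Keeping the original number text, rather than re-printing the parsed floats, means the imported value matches the source digit for digit.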

Funny how DataPort is so demanding with regard to import formats. Even funnier that it gives you no way to transform data during the "port". I'll need to add some features to my JSON import data formatter: a value prefix, a value suffix, and a geolocation converter, each configurable per property.

I'll use the prefix on record numbers from now on (everyone does that). I might use suffixes on record numbers, but more likely on record titles (appending a name, facility, region, etc. to the title). For the geolocation converter I'll use a dodgy static mapping: whatever gets my current data into the POINT structure.
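Per-property prefix and suffix settings can be modelled as a small value wrapper applied to each field before it is written out. The sketch below is hypothetical: `PropertyFormat`, the "FAC-" prefix, the region suffix, and the sample values are illustrative only and do not come from the real data or formatter.

```python
from dataclasses import dataclass


@dataclass
class PropertyFormat:
    """Hypothetical per-property settings for a JSON import formatter."""
    prefix: str = ""
    suffix: str = ""

    def apply(self, value: str) -> str:
        # Pass empty values through so a bare prefix or suffix is never imported alone.
        if value == "":
            return value
        return f"{self.prefix}{value}{self.suffix}"


record_number = PropertyFormat(prefix="FAC-")
title = PropertyFormat(suffix=" - North Region")

print(record_number.apply("1024"))         # FAC-1024
print(title.apply("Main Street Clinic"))   # Main Street Clinic - North Region
```

A geolocation converter fits the same shape: a per-property function that runs on the raw value before it is handed to Content Manager.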

Now when I import from this JSON source I get additional protection on record numbers and an importable geolocation.

Also notice that I'm exposing two properties to Content Manager, name and title, and both of them point to the "name1" property of the original source. DataPort only lets you match each source column to one field, so you cannot reuse an import source property. In my example I want to push a copy of the original value into an additional field, and having this flexibility in the formatter gives me exactly what I need.
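Exposing one source property under several names comes down to a field map where more than one output field points at the same source key. A minimal sketch, assuming the formatter emits one flat dictionary per record; `FIELD_MAP`, `expose_fields`, and the "Main Street Clinic" value are hypothetical.

```python
from typing import Any

# Hypothetical map of exposed field -> JSON source property.
# Both "title" and "name" read from "name1", so DataPort sees two separate columns.
FIELD_MAP: dict[str, str] = {
    "title": "name1",
    "name": "name1",
}


def expose_fields(source: dict[str, Any], field_map: dict[str, str]) -> dict[str, Any]:
    """Build one output row, copying a source property into every field that references it."""
    return {field: source.get(prop) for field, prop in field_map.items()}


row = expose_fields({"name1": "Main Street Clinic", "latlng": "27.769625, -82.767725"}, FIELD_MAP)
print(row)  # {'title': 'Main Street Clinic', 'name': 'Main Street Clinic'}
```

Because the duplication happens before DataPort reads the data, each exposed column can still be matched to exactly one Content Manager field.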