Generating Custom PDF Thumbnails

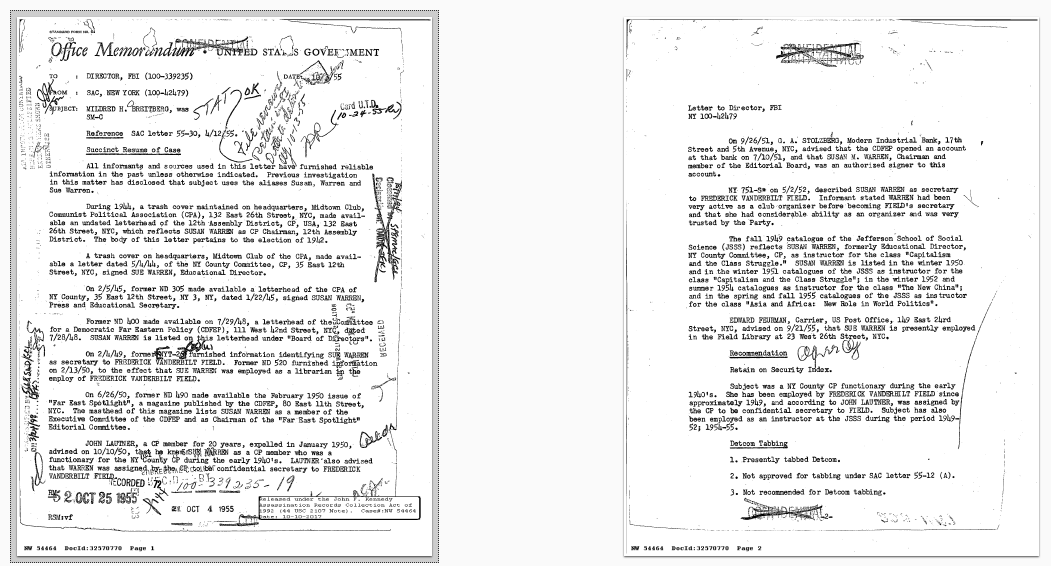

I noticed that many of the JFK documents have a meta-data cover sheet. As shown in the image below, more than half include one. In my custom user interface I'm wanting to embed a thumbnail of the document when the user is perusing search results. These cover sheets render my thumbnails useless.

Powershell to the rescue again! First I crafted a script that simply extracted the first page of each PDF....

$sourceFolder = "F:\Dropbox\CMRamble\JFK\docs_done" $pngRoot = "F:\Dropbox\CMRamble\JFK\docs_done\pngs\" $files = Get-ChildItem -Path $sourceFolder -Filter "*.pdf" | Where-Object { $_.Name.EndsWith('-ocr.pdf') -eq $false } foreach ( $file in $files ) { $fileName = "$($sourceFolder)\$($file.Name)" &pdftopng -f 1 -l 1 -r 300 "$fileName" "$pngRoot" Get-ChildItem -Path $pngRoot -Filter "*.png" | ForEach-Object { $newImageName = "$($sourceFolder)\$([System.IO.Path]::GetFileNameWithoutExtension($fileName))$($_.Name)" Move-Item $_.FullName $newImageName } }

After it finished running I was able to compare the documents. Some of the documents do not have cover sheets. It would be quickest to use the second page from all documents, but for those without cover sheets I find that the first page is more often a better representation.

Example document without cover sheet -- I would rather see the first page in the thumbnail

Example document with cover sheet -- I would rather see the second page in the thumbnail

I found that most of the cover sheets were 100KB or less in size. Although I'm sure a few documents without cover sheets might have their first page less than that size, I'm comfortable using this as a dividing line. I'll update the powershell to process the second page if the first is too small.

After I run it I get these results...

Thumbnails selected based on extracted image size (cover sheets are mostly white space and therefore smaller in size)

Sweet! Last thing I need is a powershell script that I can run against my Content Manager records. I'll have it find all PDFs, extract the original rendition, apply this thumbnail logic, and then attach a thumbnail rendition. My user interface will then directly link to the thumbnail rendition.

Clear-Host Add-Type -Path "D:\Program Files\Hewlett Packard Enterprise\Content Manager\HP.HPTRIM.SDK.dll" $db = New-Object HP.HPTRIM.SDK.Database $db.Connect $tmpFolder = "$([System.IO.Path]::GetTempPath())\cmramble" if ( (Test-Path $tmpFolder) -eq $false ) { New-Item -Path $tmpFolder -ItemType Directory } Write-Progress -Activity "Generating PDF Thumbnails" -Status "Searching for Records" -PercentComplete 0 $records = New-Object HP.HPTRIM.SDK.TrimMainObjectSearch -ArgumentList $db, Record $records.SearchString = "extension:pdf" $x = 0 foreach ( $result in $records ) { $x++ Write-Progress -Activity "Generating PDF Thumbnails" -Status "Record # $($record.Number)" -PercentComplete (($x/$records.Count)*100) $record = [HP.HPTRIM.SDK.Record]$result $hasThumbnail = $false $localFileName = $null for ( $i = 0; $i -lt $record.ChildRenditions.Count; $i++ ) { $rendition = $record.ChildRenditions.getItem($i) if ( $rendition.TypeOfRendition -eq [HP.HPTRIM.SDK.RenditionType]::Thumbnail ) { #$rendition.Delete() #$record.Save() $hasThumbnail = $true } elseif ( $rendition.TypeOfRendition -eq [HP.HPTRIM.SDK.RenditionType]::Original ) { $extract = $rendition.GetExtractDocument() $extract.FileName = "$($record.Uri).pdf" $extract.DoExtract("$($tmpFolder)", $true, $false, $null) $localFileName = "$($tmpFolder)\$($record.Uri).pdf" } } #extract the original rendition if ( ($hasThumbnail -eq $false) -and ([String]::IsNullOrWhiteSpace($localFileName) -eq $false) -and (Test-Path $localFileName)) { #get a storage spot for the image(s) $pngRoot = "$($tmpFolder)\$($record.Uri)\" if ( (Test-Path $pngRoot) -eq $false ) { New-Item -ItemType Directory -Path $pngRoot | Out-Null } #extract the first image &pdftopng -f 1 -l 1 -r 300 "$localFileName" "$pngRoot" 2>&1 | Out-Null $firstPages = Get-ChildItem -Path $pngRoot -Filter "*.png" foreach ( $firstPage in $firstPages ) { $newImageName = "$($tmpFolder)\$([System.IO.Path]::GetFileNameWithoutExtension($localFileName))$($firstPage.Name)" if ( $firstPage.Length -le 102400 ) { #get second page Remove-Item $firstPage.FullName -Force &pdftopng -f 2 -l 2 -r 300 "$fileName" "$pngRoot" $secondPages = Get-ChildItem -Path $pngRoot -Filter "*.png" foreach ( $secondPage in $secondPages ) { $record.ChildRenditions.NewRendition($secondPage.FullName, [HP.HPTRIM.SDK.RenditionType]::Thumbnail, "PSImaging PNG") | Out-Null $record.Save() Remove-Item $secondPage.FullName -Force } } else { #use the first page $record.ChildRenditions.NewRendition($firstPage.FullName, [HP.HPTRIM.SDK.RenditionType]::Thumbnail, "PSImaging PNG") | Out-Null $record.Save() Remove-Item $firstPage.FullName -Force } } Remove-Item $pngRoot -Recurse -Force Write-Host "Generated Thumbnail for $($record.Number)" } else { Write-Host "Skipped $($record.Number)" } Remove-Item $localFileName }

If I visit my user interface I can see the results first-hand: