Ensuring Records Managers can access MicroStrategy

I've got a MicroStrategy (MSTR) server environment with a Records Management group. I'd like to ensure that certain new Content Manager (CM) users always have access to MSTR so that they can reach the appropriate dashboards and reports. To keep this post simple, I'll focus on CM administrators.

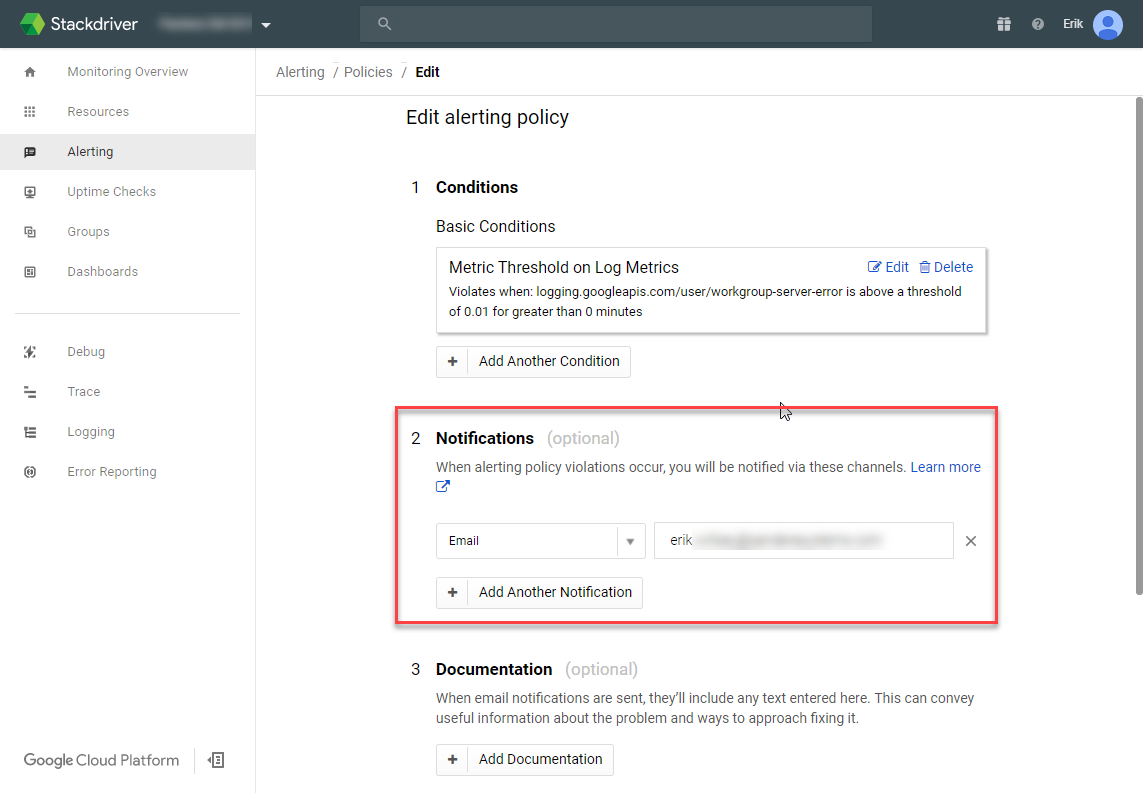

Within MicroStrategy I'd like to create a new user and include them in a "Records Management" group, like so:

This MSTR environment does not have the REST API available, so I'm limited to using Command Manager. My CM instance is in the cloud, and I'm not allowed to install the CM client on the MSTR server. To bridge that divide, I'll use a PowerShell script that queries the CM ServiceAPI and uses the Invoke-Expression cmdlet to run Command Manager's command-line tool, cmdmgr.
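Invoke-Expression re-parses the whole command string, so a project source name or password containing a double quote, `$` or backtick can change what actually runs. One alternative, swapped in here rather than taken from the script in this post, is to build the cmdmgr arguments as an array and pass them with PowerShell's call operator (`&`). This is a minimal sketch: `Get-CmdMgrArgumentList` is a hypothetical helper, and the `-p` password switch is assumed from Command Manager's command-line options rather than shown in this post. The helper leaves out `-p` when no password is set, because PowerShell versions before 7.3 drop empty-string arguments when calling native programs.

```powershell
# Hypothetical helper: returns cmdmgr's arguments as an array so each value is
# passed as exactly one argument, with no re-parsing of a command string.
function Get-CmdMgrArgumentList {
    param(
        [string]$ProjectSource,
        [string]$UserName,
        [string]$Password,
        [string]$ScriptFile,
        [string]$LogFile
    )
    $argList = @('-n', $ProjectSource, '-u', $UserName)
    # Assumption: -p supplies the password. Skip it when empty, because older
    # PowerShell versions silently drop empty-string native arguments.
    if ($Password) { $argList += @('-p', $Password) }
    $argList += @('-f', $ScriptFile, '-o', $LogFile)
    return $argList
}

# Usage (requires cmdmgr on the PATH):
# $cmdArgs = Get-CmdMgrArgumentList -ProjectSource $psn -UserName $psnUser -Password $psnPwd -ScriptFile $outFile -LogFile $logFile
# & cmdmgr @cmdArgs
```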

First, I need a function that returns a list of CM administrators from the ServiceAPI:

```powershell
function Get-CMAdministrators {
    Param($baseServiceApiUri)

    # Search for locations whose user type is Administrator, returning only the properties needed later
    $queryUri = ($baseServiceApiUri + "/Location?q=userType:administrator&pageSize=100000&properties=LocationLogsInAs,LocationLoginExpires,LocationEmailAddress,LocationGivenNames,LocationSurname")

    # Enable TLS 1.0-1.2 for HTTPS endpoints (SSL 3.0 is insecure and deliberately left out)
    [System.Net.ServicePointManager]::SecurityProtocol = [System.Net.SecurityProtocolType]'Tls,Tls11,Tls12'

    $headers = @{ Accept = "application/json" }
    $response = Invoke-RestMethod -Uri $queryUri -Method Get -Headers $headers
    Write-Debug ($response | Out-String)

    if ( $response.TotalResults -gt 0 ) {
        return $response.Results
    }
    return $null
}
```
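The ServiceAPI returns each requested property as an object whose value sits in a `Value` member, which is why the final script reads `$admin.LocationLogsInAs.Value`. The sketch below, assuming that shape, flattens the results into plain objects that are easier to inspect or log. `ConvertTo-CMAdminSummary` is a hypothetical helper, and the JSON is a made-up, trimmed example containing only some of the properties this post requests.

```powershell
# Hypothetical helper: flattens ServiceAPI Location results (each property
# wrapped in an object with a Value member) into plain objects.
function ConvertTo-CMAdminSummary {
    param([object[]]$Results)
    foreach ($location in $Results) {
        [pscustomobject]@{
            LoginName = $location.LocationLogsInAs.Value
            FullName  = ('{0}, {1}' -f $location.LocationSurname.Value, $location.LocationGivenNames.Value)
            Email     = $location.LocationEmailAddress.Value
        }
    }
}

# Made-up, trimmed response used only to illustrate the shape
$sample = @'
{
  "TotalResults": 1,
  "Results": [
    {
      "LocationLogsInAs":     { "Value": "jsmith" },
      "LocationGivenNames":   { "Value": "Jane" },
      "LocationSurname":      { "Value": "Smith" },
      "LocationEmailAddress": { "Value": "jsmith@example.com" }
    }
  ]
}
'@ | ConvertFrom-Json

ConvertTo-CMAdminSummary -Results $sample.Results
```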

Second, I need a function that creates a user within MSTR by writing a Command Manager statement to a temporary script file and running it with cmdmgr:

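```powershell
function New-MstrUser {
    Param($psn, $psnUser, $psnPwd, $userName, $fullName, $password, $group)

    # Create the user, force a password change at first logon and add the user to the group
    $command = "CREATE USER `"$userName`" FULLNAME `"$fullName`" PASSWORD `"$password`" ALLOWCHANGEPWD TRUE CHANGEPWD TRUE IN GROUP `"$group`";"
    $outFile = $null
    $logFile = $null
    try {
        $outFile = New-TemporaryFile
        Add-Content -Path $outFile -Value $command
        $logFile = New-TemporaryFile

        # Pass the project source password only when one is set
        $pwdSwitch = if ($psnPwd) { " -p `"$psnPwd`"" } else { "" }
        $cmdmgrCommand = "cmdmgr -n `"$psn`" -u `"$psnUser`"$pwdSwitch -f `"$outFile`" -o `"$logFile`""
        Invoke-Expression $cmdmgrCommand

        # Echo Command Manager's log so failures are visible before it is deleted
        Get-Content -Path $logFile
    }
    catch {
        Write-Warning "Failed to create MicroStrategy user '$userName': $_"
    }
    finally {
        # Remove the temporary script and log files
        if ( $outFile -and (Test-Path $outFile) ) { Remove-Item $outFile -Force }
        if ( $logFile -and (Test-Path $logFile) ) { Remove-Item $logFile -Force }
    }
}
```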
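`New-MstrUser` wraps each value in double quotes, so a name containing a double quote would end the string early and produce a broken statement. A minimal sketch of a guard, assuming you would rather reject such users than guess at Command Manager's escaping rules: the hypothetical `New-MstrCreateUserStatement` builds the same `CREATE USER` statement and throws if any value contains a double quote.

```powershell
# Hypothetical helper: builds the CREATE USER statement used in this post and
# refuses values containing a double quote (no escaping rules are assumed).
function New-MstrCreateUserStatement {
    param(
        [Parameter(Mandatory)][string]$UserName,
        [Parameter(Mandatory)][string]$FullName,
        [Parameter(Mandatory)][string]$Password,
        [Parameter(Mandatory)][string]$Group
    )
    foreach ($value in @($UserName, $FullName, $Password, $Group)) {
        if ($value.Contains('"')) {
            # The value is not echoed, since it may be the password
            throw 'A value contains a double quote and cannot be embedded safely.'
        }
    }
    return ('CREATE USER "{0}" FULLNAME "{1}" PASSWORD "{2}" ALLOWCHANGEPWD TRUE CHANGEPWD TRUE IN GROUP "{3}";' -f $UserName, $FullName, $Password, $Group)
}
```

Its output could be written to the temporary script file in place of `$command`.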

The last step is to tie them together:

```powershell
$psn = "MicroStrategy Analytics Modules"   # passed to cmdmgr -n
$psnUser = "Administrator"
$psnPwd = ""
$recordsManagementGroupName = "Records Management"
$baseUri = "http://10.0.0.1/HPECMServiceAPI"

$administrators = Get-CMAdministrators -baseServiceApiUri $baseUri
if ( $null -ne $administrators ) {
    foreach ( $admin in $administrators ) {
        # The initial MSTR password is the CM login name; CHANGEPWD TRUE forces a change at first logon
        $userParams = @{
            psn      = $psn
            psnUser  = $psnUser
            psnPwd   = $psnPwd
            userName = $admin.LocationLogsInAs.Value
            fullName = ($admin.LocationSurname.Value + ', ' + $admin.LocationGivenNames.Value)
            password = $admin.LocationLogsInAs.Value
            group    = $recordsManagementGroupName
        }
        New-MstrUser @userParams
    }
}
```

After running it, my users are created!
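The loop above starts cmdmgr once per administrator. Since a Command Manager script can contain several semicolon-terminated statements, one variation (not the approach used in this post) is to write every `CREATE USER` statement into a single script and run cmdmgr once. The sketch below only builds the script lines; `New-MstrBatchScript` is a hypothetical helper, and it keeps this post's choice of using the login name as the initial password.

```powershell
# Hypothetical helper: turns ServiceAPI administrator results into Command
# Manager script lines, one CREATE USER statement per administrator.
function New-MstrBatchScript {
    param(
        [object[]]$Administrators,
        [string]$Group
    )
    foreach ($admin in $Administrators) {
        $login = $admin.LocationLogsInAs.Value
        # Skip locations that have no login name
        if ([string]::IsNullOrWhiteSpace($login)) { continue }
        $fullName = '{0}, {1}' -f $admin.LocationSurname.Value, $admin.LocationGivenNames.Value
        # As in the original script, the initial password is the login name
        'CREATE USER "{0}" FULLNAME "{1}" PASSWORD "{0}" ALLOWCHANGEPWD TRUE CHANGEPWD TRUE IN GROUP "{2}";' -f $login, $fullName, $Group
    }
}
```

The returned lines could be saved with `Set-Content` to a temporary file and passed to cmdmgr with `-f`, as `New-MstrUser` does for a single statement.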