Export Mania 2017 - Record Addin

This is the third of four posts tackling how to export a meta-data file along with electronic documents. We want the exported electronic documents to have the record number in their file names, instead of the standard (read: oddball) naming conventions of the various features. In this post I'll show how to create a custom record add-in that meets the requirement.

So let's dive right on in!

I created a new C# Class library, imported the CM .Net SDK (HP.HPTRIM.SDK), and created an Export class that will implement the ITrimAddin interface.

Next I'll use the Quick Action feature of Visual Studio to implement the interface. It generates all of the required members and methods, but each stub throws a NotImplementedException. I immediately reorganized what was generated and updated it so that it does not throw exceptions.

Collapsed appearance of the class

I find it helpful to organize the members and methods into regions reflective of the features & functionality. For this particular add-in I will ignore the "Save and Delete Events" and "Field Customization" regions. Currently my private members and public properties regions look as shown below.

    #region Private members
    private string errorMessage;
    #endregion

    #region Public Properties
    public override string ErrorMessage => errorMessage;
    #endregion

If I expand my Initialization region I see two methods: Initialise and Setup. Initialise is invoked the first time the add-in is loaded within the client. Setup is invoked when a new object is added. For now I don't truly need to do anything in either, but in the future I would use the initialise method to load any information needed for the user (maybe I'd fetch the last extraction path from the registry, a bunch of configuration data from somewhere in CM, etc).

    #region Initialization
    public override void Initialise(Database db)
    {
    }

    public override void Setup(TrimMainObject newObject)
    {
    }
    #endregion

Next I need to tackle the external link region. There are two types of methods in this region: ones that deal with the display of menu links and the others that actually perform an action. My starting code is shown below.

    #region External Link
    public override TrimMenuLink[] GetMenuLinks()
    {
        return null;
    }

    public override bool IsMenuItemEnabled(int cmdId, TrimMainObject forObject)
    {
        return false;
    }

    public override void ExecuteLink(int cmdId, TrimMainObject forObject, ref bool itemWasChanged)
    {
    }

    public override void ExecuteLink(int cmdId, TrimMainObjectSearch forTaggedObjects)
    {
    }
    #endregion

First I'll tackle the menu links. The TrimMenuLink class, shown below, is marked as abstract. This means I need to create my own concrete class deriving from it.

Note that the constructor is marked protected. Thankfully, because of that, I can eventually do some creative things with MenuLink (maybe another blog post someday). For now I'll just add a class to my project named "ExportRecordMenuLink". I apply the same process to it once it's generated, giving me the results below.

    using HP.HPTRIM.SDK;

    namespace CMRamble.Addin.Record.Export
    {
        public class ExportRecordMenuLink : TrimMenuLink
        {
            public override int MenuID => 8001;
            public override string Name => "Export Record";
            public override string Description => "Exports records to disk using Record Number as file name";
            public override bool SupportsTagged => true;
        }
    }

Now that I've got a Menu Link for my add-in, I go back to my main class and make a few adjustments. First I might as well create a private member to store an array of menu links. Then I go into the Initialise method and assign it a new array (one that contains my new menu link). Last, I have the GetMenuLinks method return that array.

    private TrimMenuLink[] links;

    public override void Initialise(Database db)
    {
        links = new TrimMenuLink[1] { new ExportRecordMenuLink() };
    }

    public override TrimMenuLink[] GetMenuLinks()
    {
        return links;
    }

The IsMenuItemEnabled method will be invoked each time a record is "selected" within the client. For my scenario I want to check that the object is a record and that it has an electronic document attached. I also need to ensure the command ID matches the one I've created in the ExportRecordMenuLink.

    public override bool IsMenuItemEnabled(int cmdId, TrimMainObject forObject)
    {
        return (links[0].MenuID == cmdId
            && forObject.TrimType == BaseObjectTypes.Record
            && ((HP.HPTRIM.SDK.Record)forObject).IsElectronic);
    }

Almost done! There are two methods left to implement, both of which are named "ExecuteLink". The first handles the invocation of the add-in on a single object. The second handles the invocation of the add-in on a collection of tagged objects. I'm not going to waste time doing fancy class design and appropriate refactoring, so pardon my code.

    // Requires: using System.IO; using System.Windows.Forms;
    public override void ExecuteLink(int cmdId, TrimMainObject forObject, ref bool itemWasChanged)
    {
        // "as" yields null when the object is not a record
        HP.HPTRIM.SDK.Record record = forObject as HP.HPTRIM.SDK.Record;
        if (record != null && links[0].MenuID == cmdId)
        {
            // Ask the user where the document should be placed
            FolderBrowserDialog directorySelector = new FolderBrowserDialog()
            {
                Description = "Select a directory to place the electronic documents",
                ShowNewFolderButton = true
            };
            if (directorySelector.ShowDialog() == DialogResult.OK)
            {
                // File name is "<record number>.<extension>"
                string outputPath = Path.Combine(directorySelector.SelectedPath, $"{record.Number}.{record.Extension}");
                record.GetDocument(outputPath, false, string.Empty, string.Empty);
            }
        }
    }

In the code above I prompt the user for the destination directory (where the files should be placed). Then I formulate the output path and extract the document. I should be removing any invalid characters from the record number (slashes are acceptable in the number but not on the disk), but again you can do that in your own implementation.
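For anyone who wants a starting point for that clean-up, here is a minimal sketch. The FileNameHelper class and its ToSafeFileName method are names I made up for illustration (they are not part of the CM SDK). It relies only on the standard Path.GetInvalidFileNameChars call, and it assumes an underscore is an acceptable replacement character.

```csharp
using System;
using System.IO;
using System.Linq;

// Hypothetical helper, not part of the CM SDK.
public static class FileNameHelper
{
    // Replaces every character that cannot appear in a file name
    // (plus both slash styles, regardless of platform) with the
    // given replacement character.
    public static string ToSafeFileName(string recordNumber, char replacement = '_')
    {
        if (string.IsNullOrWhiteSpace(recordNumber))
        {
            throw new ArgumentException("Record number must not be empty.", nameof(recordNumber));
        }

        char[] invalid = Path.GetInvalidFileNameChars();
        char[] cleaned = recordNumber
            .Select(c => (invalid.Contains(c) || c == '/' || c == '\\') ? replacement : c)
            .ToArray();
        return new string(cleaned);
    }
}
```

In the ExecuteLink methods the file name would then be built from FileNameHelper.ToSafeFileName(record.Number) rather than from record.Number directly. Keep in mind that two different record numbers could, in principle, map to the same cleaned name, so a real implementation may want to check for an existing file first.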

I repeat the process for the next method and end up with the code shown below.

    public override void ExecuteLink(int cmdId, TrimMainObjectSearch forTaggedObjects)
    {
        if (links[0].MenuID == cmdId)
        {
            // Prompt once, then reuse the chosen directory for every tagged record
            FolderBrowserDialog directorySelector = new FolderBrowserDialog()
            {
                Description = "Select a directory to place the electronic documents",
                ShowNewFolderButton = true
            };
            if (directorySelector.ShowDialog() == DialogResult.OK)
            {
                foreach (var taggedObject in forTaggedObjects)
                {
                    // Skip anything in the selection that is not a record
                    HP.HPTRIM.SDK.Record record = taggedObject as HP.HPTRIM.SDK.Record;
                    if (record != null)
                    {
                        string outputPath = Path.Combine(directorySelector.SelectedPath, $"{record.Number}.{record.Extension}");
                        record.GetDocument(outputPath, false, string.Empty, string.Empty);
                    }
                }
            }
        }
    }

All done! Now, again, I left out the meta-data file & user interface for now. If anyone is interested, add a comment and I'll create another post. For now I'll compile this and add it to my instance of Content Manager.

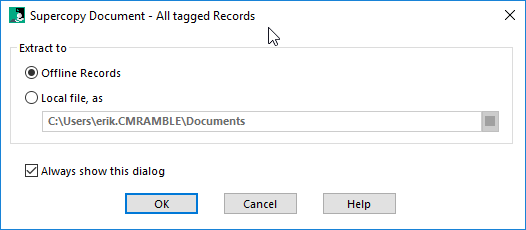

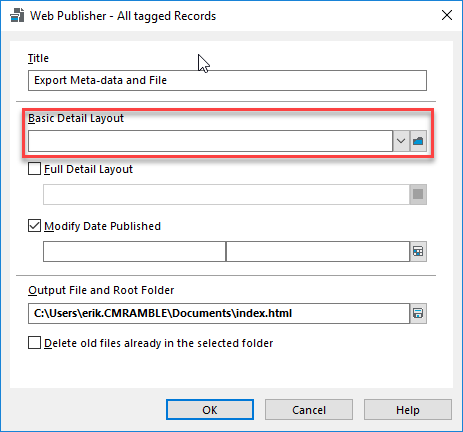

Here's what it looks like so far for the end-user.

A wise admin would encourage users to place this in a custom ribbon group, as shown below.

When I execute the add-in on one record I get prompted for where to place it...

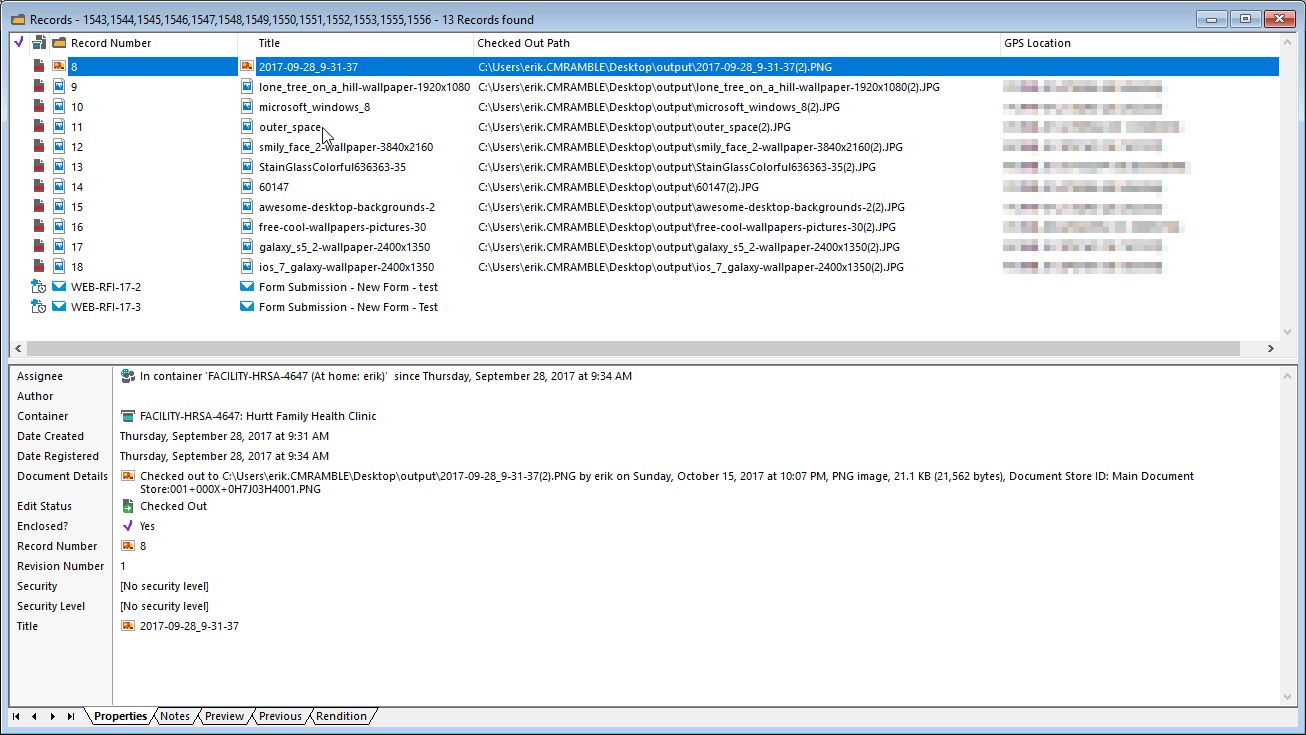

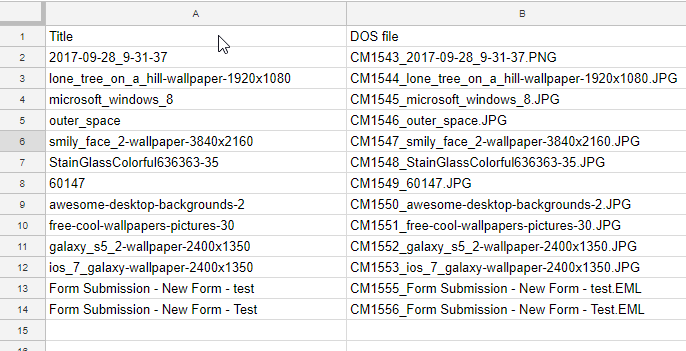

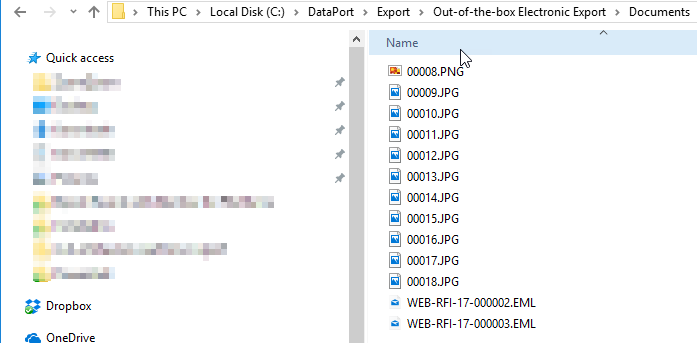

Success! It gave me my electronic document with the correct file name.

Now if I try it after tagging all the records, the exact same pop-up appears and all my documents are extracted properly.

Hopefully with this post I've shown how easy it is to create custom add-ins. These add-ins don't necessarily need to be deployed to everyone, but oftentimes they are, and that's the main reason people shy away from them. Deploying them is nowhere near as complicated as most make it seem.

You can download the full source here.